Let me reassure you: data warehouses don’t really explode. No one is in danger here. But what can explode are hardware and licence costs, data processing and ingestion times, query times, etc.

These problems are usually caused by equipment and software that aren’t designed for the data volumes that you want them to process. We’ve heard it time and again: When it comes to data (big data or otherwise), it’s a challenge to manage the variety, velocity and especially the volume of information produced by companies. And the more time passes, the more serious these 3 Vs become.

A bit of background

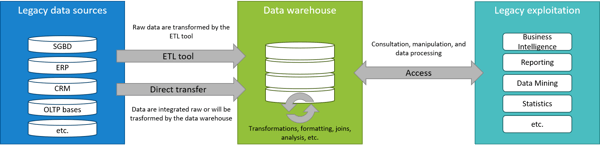

All self-respecting companies have adopted a relational database management system (RDBMS) to support their operations. This has led to the creation of data warehouses, which companies rely on for their business intelligence, reporting and operations analysis. A data warehouse is an integrated store of raw or processed data, which may come from multiple and potentially varied sources.

Data warehouses differ from traditional databases in terms of performance. The goal is to allow users to query the data, process it, and draw value from it to support business decisions. It all boils down to business intelligence, or helping business managers make decisions.

All this naturally comes at a cost. That's why companies like Teradata, Oracle, SAP, HP, IBM and Informatica have such satisfied shareholders. And on top of that, there are the ETL (extract, transform and load) tools, the data visualization tools and the data mining tools.

In normal processes, data is produced by operational systems that store it in their respective databases. The data in these databases can then be sent to the data warehouse using ETL tools. The warehouse will process, clean and transform the data to leave it in a format that’s useful for reports and analysis. Reports are finally produced using tools that allow you to access, analyze and view the data stored in the data warehouse.
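The flow described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: two in-memory SQLite databases stand in for the operational system and the warehouse, the table names `sales` and `fact_sales` and the sample rows are made up, and the transform step runs in the script for brevity rather than inside a real ETL tool or warehouse.

```python
import sqlite3


def build_demo_source() -> sqlite3.Connection:
    """Create a stand-in operational database with a few made-up sales rows."""
    source = sqlite3.connect(":memory:")
    source.execute("CREATE TABLE sales (store TEXT, amount REAL)")
    source.executemany(
        "INSERT INTO sales VALUES (?, ?)",
        [(" montreal ", 120.0), ("Quebec", None), ("quebec", 80.5)],
    )
    source.commit()
    return source


def run_etl(source: sqlite3.Connection, warehouse: sqlite3.Connection) -> int:
    """Extract, clean and load sales rows; return the number of rows loaded."""
    # Extract: read the raw rows from the operational table.
    rows = source.execute("SELECT store, amount FROM sales").fetchall()

    # Transform: drop rows with a missing amount and normalize store names.
    cleaned = [
        (store.strip().upper(), float(amount))
        for store, amount in rows
        if amount is not None
    ]

    # Load: write the cleaned rows into the (hypothetical) warehouse table.
    warehouse.execute(
        "CREATE TABLE IF NOT EXISTS fact_sales (store TEXT, amount REAL)"
    )
    warehouse.executemany("INSERT INTO fact_sales VALUES (?, ?)", cleaned)
    warehouse.commit()
    return len(cleaned)


def report_totals(warehouse: sqlite3.Connection) -> list:
    """Reporting step: total sales per store, read from the warehouse."""
    query = (
        "SELECT store, SUM(amount) FROM fact_sales "
        "GROUP BY store ORDER BY store"
    )
    return warehouse.execute(query).fetchall()


if __name__ == "__main__":
    demo_warehouse = sqlite3.connect(":memory:")
    loaded = run_etl(build_demo_source(), demo_warehouse)
    print(loaded, report_totals(demo_warehouse))
```

Even in this toy version, the three stages are separate, so it is easy to see where the processing effort goes as the number of source rows grows.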

While systems like this are expensive, they are essential—a fact we’ve known for quite some time. When they’re properly set up, these systems give you a good return on investment because managers are able to base their decisions on data and analysis—and this is generally better than relying purely on intuition.

When the going gets tough

All these operations hog a good portion of the resources available within the data warehouse: RAM, CPU, bandwidth, etc. When operations and activities are stable, there are usually sufficient resources. But when the pace picks up, you run the risk of poor performance.

Let’s consider a retailer that opens a new store while also jumping into e-commerce and merging with a competitor. All this increases the use of their operational databases and puts added pressure on the data warehouse. This ramped-up activity leads to three key problems:

1) Everything takes too long

It isn’t unusual to see data transformations take days to complete in data warehouses because the volume of data is excessive or there isn’t enough power. In fact, on average ETL uses 60% of CPU, which doesn’t leave much for data analysis and queries. As a result, it’s impossible to make decisions in real time because the data can’t be processed in real time. Clearly, this isn’t a good thing.

2) It’s a complex evolution

Generally, data warehouses are designed for structured data. That means you can forget all that semi-structured stuff like logs or data from the Internet of Things, geolocation, social networks, multimedia systems, etc. Even a company’s internal structured data sometimes has to be integrated in a simplified way, summarized or aggregated because the model is too complicated. Unfortunately, this reduces the depth of the data, which isn’t ideal for analysis.

3) Costs are increasing

Licensing models are expensive. And the more power, equipment and hard drive space the company adds, the more it has to pay to maintain its operations. And we’re not even talking about modernizations or upgrades. This is purely about maintaining data storage processes when your operations increase. There’s no real added value.

OK, so these are small inconveniences, but the important thing is that it works. And the golden rule in IT is, “If it ain’t broke, don’t fix it.” It’s true that in most cases, the databases aren’t broken. However, they are often ineffective and inefficient. Are those words you want to associate with your IT?

An appropriate solution

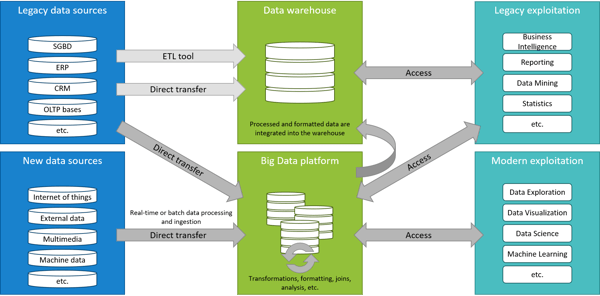

The right solution, one that has proven its success in the world of analytics, is enterprise data warehouse (EDW) optimization. There are several technologies that can address the inconveniences we mentioned above and optimize your data warehouse. These include a Hadoop distribution like Hortonworks, a NOVIPRO partner.

Hadoop is an open-source framework for the distributed storage and processing of data, regardless of volume or format. While it’s not the only solution on the market, it’s the reference technology for big data. Hortonworks offers a distribution of Hadoop called the Hortonworks Data Platform (HDP), which can be ideal for data warehouse optimization.

This type of technology doesn’t just address the small inconveniences. It even goes further by:

1) Reducing processing times

Data transformation is not carried out directly within the data warehouse. Instead, it happens before even entering it. This means that the warehouse’s power is mostly dedicated to accessing data and running user queries on this formatted data. Users also have a degree of leeway to explore data and run ad hoc queries in the data warehouse or on the big data platform, without having to worry if there’s enough power available.

2) Making integration less complex

The beauty of Hadoop and similar technologies is that they place no constraints on the format of the data you store or process. You can include data that comes from external sources or that is less structured, without having to worry about making it fit into a rigid data model. If the data proves to be relevant, it’s easy to put it in the right format and then add it to the data warehouse.

3) Reducing costs

If your warehouse has to process fewer jobs and requires less power because it no longer spends time integrating data, it’s going to cost less. EDW providers bill based on the number of CPUs, the number of instances, the quantity of stored data, etc. If your company is billed for the quantity of stored data, a common practice is to transfer all old or unused data (which is generally about 70% of it) from the data warehouse to Hadoop. This means that analysts can still access data that would otherwise be archived, and there’s space available in the warehouse for fresh data that will be used more frequently. That’s what we call an active archive.
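The active archive idea can be illustrated with a small Python sketch. It is not tied to any real warehouse or Hadoop API: the record layout (`name`, `size_gb`, `last_accessed`), the one-year threshold and the sample figures are all assumptions chosen for the example.

```python
from datetime import date, timedelta


def split_active_archive(records, today, max_age_days=365):
    """Split records into (hot, cold) based on when they were last accessed.

    Hot records stay in the warehouse; cold records are candidates for the
    archive platform. The threshold is a hypothetical policy, not a standard.
    """
    cutoff = today - timedelta(days=max_age_days)
    hot = [r for r in records if r["last_accessed"] >= cutoff]
    cold = [r for r in records if r["last_accessed"] < cutoff]
    return hot, cold


def stored_gb(records):
    """Total stored volume, the quantity a volume-based bill would depend on."""
    return sum(r["size_gb"] for r in records)


# Made-up sample data for illustration only.
SAMPLE_TABLES = [
    {"name": "sales_current", "size_gb": 30, "last_accessed": date(2023, 12, 1)},
    {"name": "sales_2021", "size_gb": 50, "last_accessed": date(2021, 5, 1)},
    {"name": "sales_2019", "size_gb": 20, "last_accessed": date(2020, 1, 1)},
]

if __name__ == "__main__":
    hot, cold = split_active_archive(SAMPLE_TABLES, today=date(2024, 1, 1))
    print("stays in warehouse (GB):", stored_gb(hot))
    print("moves to archive (GB):", stored_gb(cold))
```

With this sample data, 70 of the 100 GB would move out of the warehouse. In practice the split would be driven by real access statistics rather than a fixed date rule.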

4) Supporting changes in analysis needs

Big data goes hand-in-hand with data science, machine learning, deep learning, artificial intelligence, etc. All these fields require computing and processing power, massive amounts of data and access to tools and languages for data analysis. Most tools and software used in the big data field—ETL tools, data visualization tools and statistical analysis tools—are equipped with connectors to read data stored on Hadoop.

Conclusion

Besides its cost-saving benefits, this form of system optimization can also benefit your business units. Keep in mind that improvements should yield a return on investment or allow you to better meet your business needs—and achieving both is even better!

The digital shift for companies is still in its infancy, but this is a good place to start. It’s a sure value and a quick win. And even if it doesn't end up being used to support business decisions, at the very least it will improve your IT operations and save your company some money.

Nonetheless, it’s important not to overlook all the various other factors and requirements associated with this type of system. Although they are not the express topics of this article, it’s important to keep in mind the challenges linked to data governance, security, user training and awareness, change management and leadership and collaboration needs. After all, you're embarking on an IT project, with everything that this entails.

NOVIPRO can help you ease into this transformation and put you in control of your data warehouse optimization plan. We can start by offering you leading-edge technology from our partner Hortonworks, the leading provider of Hadoop, SAS and IBM platforms. This will ensure you get expert assistance with big data. But before doing that, we will conduct an objective and transparent assessment of your needs. Our goal is to find the right solution and technology to give you a real return on investment.

Our teams of experts are known for their in-depth understanding of the various layers that make up IT systems, including hardware, operating systems, software and how to use everything. This knowledge informs our business solutions, technology solutions and cloud platform. We can also help you deploy and make effective use of solutions like Hortonworks. Do not hesitate to contact us!